As aircraft become more advanced and complex, pilots are tasked with monitoring and controlling increasingly sophisticated systems. This can lead to high mental workloads, sensory overload and impaired situational awareness. Researchers are exploring ways to augment human perception and cognition to address these challenges using emerging technologies such as virtual and augmented reality and haptic feedback.

Introduction

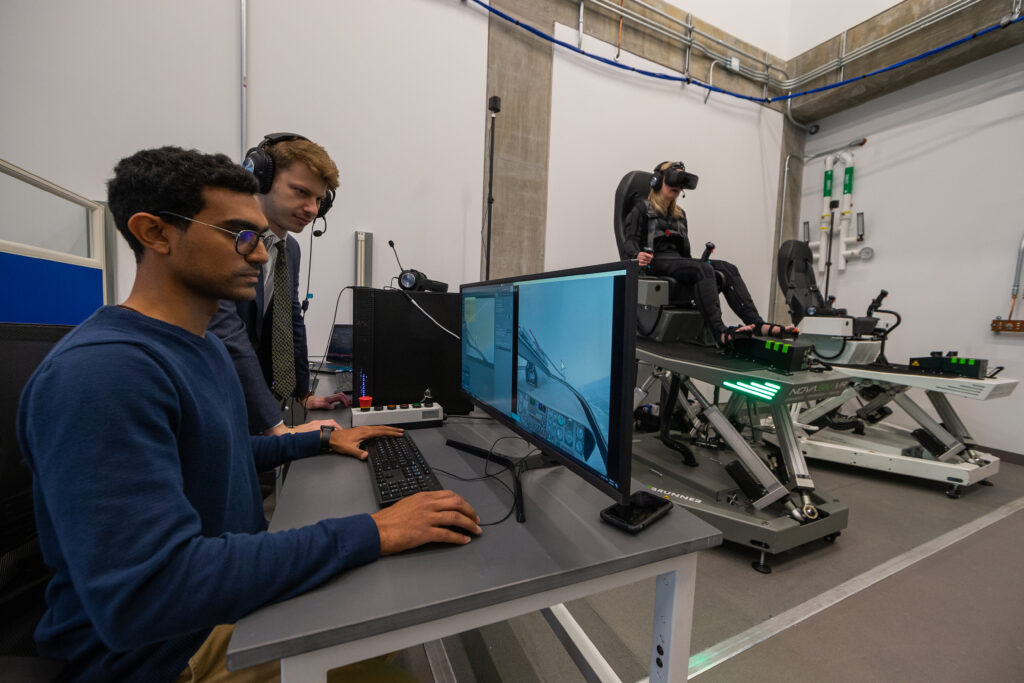

A team of researchers from the University of Maryland’s Extended Reality Flight Simulation and Control Lab recently conducted a study on using full-body haptic suits to provide haptic feedback cues to pilots. Their goal was to develop haptic cueing algorithms to augment pilots’ perception and improve their ability to control aircraft, especially in degraded visual environments.

The research focuses on closed-loop compensatory tracking tasks, in which the pilot is presented with the error between a desired command input and the corresponding vehicle output motion and acts on that error to produce a control action.
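To make the compensatory structure concrete, here is a minimal Python sketch of a roll-angle tracking loop. Everything in it is a hypothetical stand-in rather than the lab's setup: the vehicle is modeled as a first-order roll-rate lag, the command is an arbitrary sum of sines, and the "pilot" is a pure gain acting only on the displayed error (command minus vehicle roll angle). The function names and parameter values are illustrative assumptions.

```python
import math


def simulate_compensatory_tracking(pilot_gain, duration_s=30.0, dt=0.01,
                                   vehicle_gain=1.0, roll_lag_s=0.5):
    """Return (commands, errors) for a simple roll compensatory tracking loop.

    Hypothetical model: roll rate p follows the stick through a first-order
    lag, p' = (vehicle_gain * stick - p) / roll_lag_s, and roll angle phi' = p.
    The "pilot" is a pure gain on the displayed error.
    """
    phi = 0.0  # vehicle roll angle, deg
    p = 0.0    # vehicle roll rate, deg/s
    commands, errors = [], []
    for k in range(int(duration_s / dt)):
        t = k * dt
        # Hypothetical sum-of-sines roll command, deg
        command = 10.0 * math.sin(0.5 * t) + 5.0 * math.sin(1.2 * t)
        # The only signal the pilot acts on: command minus vehicle output
        error = command - phi
        stick = pilot_gain * error
        # Forward-Euler integration of the assumed vehicle dynamics
        p += dt * (vehicle_gain * stick - p) / roll_lag_s
        phi += dt * p
        commands.append(command)
        errors.append(error)
    return commands, errors


def rms(values):
    """Root-mean-square of a sequence, a common tracking-error metric."""
    return math.sqrt(sum(v * v for v in values) / len(values))


if __name__ == "__main__":
    commands, errors = simulate_compensatory_tracking(pilot_gain=4.0)
    print(f"RMS command: {rms(commands):.2f} deg, RMS error: {rms(errors):.2f} deg")
```

With `pilot_gain=0.0` the vehicle never moves, so the error equals the command; closing the loop with a positive gain drives the RMS error well below the RMS of the command.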

Historically, information about the error was presented to the pilot through visual displays. The idea behind this research is to replace and/or augment these visual displays with full-body haptic (tactile) displays.

Procedure

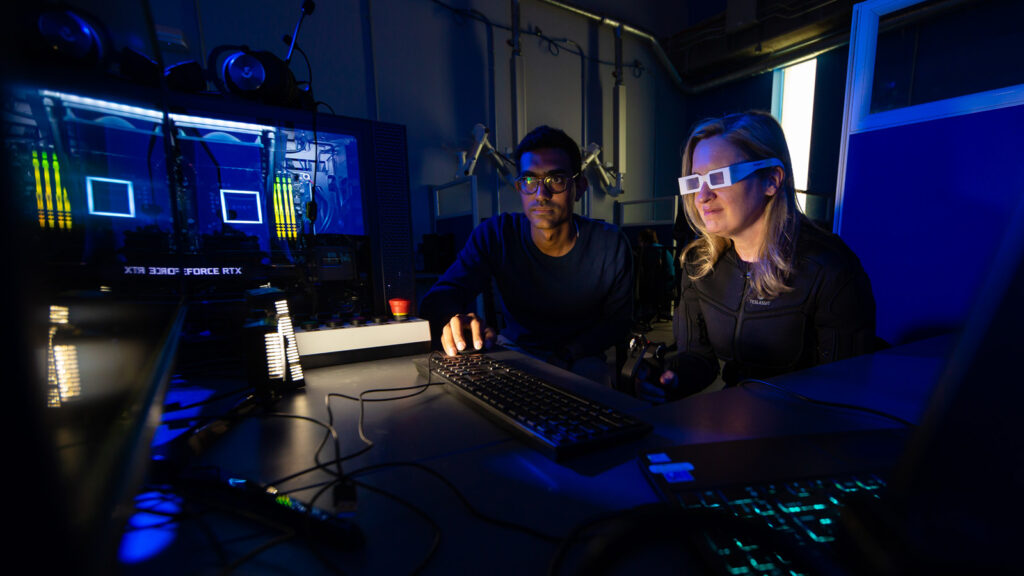

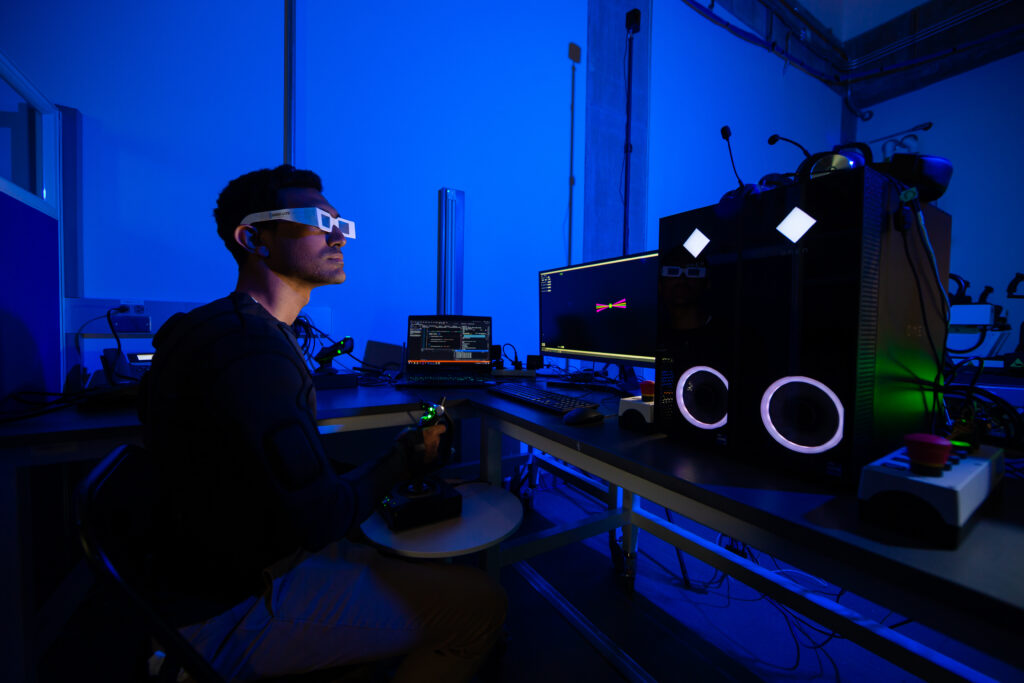

The researchers used the TESLASUIT, a full-body haptic suit, to provide localized electrical muscle stimulation (EMS) feedback to pilots. They developed haptic cueing algorithms based on proportional-derivative control of the error between the desired and actual aircraft motion. These algorithms were designed to cue the pilot on how much and in what direction they need to adjust the controls to minimize the error.
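The article does not give the cueing law itself, so the following is only a sketch of what proportional-derivative cueing of the tracking error could look like. The gains, the sample period, the backward-difference rate estimate, the left/right body-side mapping and the saturation to a normalized intensity in [0, 1] are all assumptions, and the class `PDHapticCue` is hypothetical; it does not use the TESLASUIT SDK.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class PDHapticCue:
    """Hypothetical PD cueing law mapping tracking error to (side, intensity)."""
    kp: float  # proportional gain: intensity per deg of error
    kd: float  # derivative gain: intensity per deg/s of error rate
    dt: float  # sample period, s
    _prev_error: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, error: float) -> Tuple[str, float]:
        # Backward-difference estimate of the error rate; zero on the first sample
        if self._prev_error is None:
            rate = 0.0
        else:
            rate = (error - self._prev_error) / self.dt
        self._prev_error = error
        u = self.kp * error + self.kd * rate
        # Sign of the PD output selects which side to stimulate (assumed mapping)
        side = "none" if u == 0 else ("right" if u > 0 else "left")
        # Magnitude sets stimulation intensity, saturated to [0, 1]
        intensity = min(abs(u), 1.0)
        return side, intensity


if __name__ == "__main__":
    cue = PDHapticCue(kp=0.05, kd=0.01, dt=0.01)
    print(cue.update(10.0))  # proportional term only: about ("right", 0.5)
    print(cue.update(9.0))   # error shrinking at about 100 deg/s: about ("left", 0.55)
```

The derivative term is what lets a PD cue anticipate: on the second sample the error is still positive, but it is shrinking fast enough that the cue flips to the opposite side, prompting the pilot to ease off before overshooting.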

The control inceptor for the tracking task was a Logitech X52 Pro joystick, which has three degrees of freedom: left/right, fore/aft, and twist. Vision-restricting devices (“foggles”) were used to limit the pilot’s field of view.

The researchers tested three feedback conditions: haptic feedback only, visual feedback only, and combined visual and haptic feedback. They also tested the combined feedback in both normal and degraded visual environments, with the degraded condition simulated using foggles. Four test subjects, including one test pilot, participated in the experiments, which involved compensatory tracking tasks in which the subject had to control the aircraft to match a commanded roll angle.

Results

The results showed that full-body haptic cueing with PD control provided satisfactory task performance even when haptic feedback was used alone. When combined with visual feedback, haptic feedback also improved performance, especially in degraded visual conditions. With haptic feedback, the test subjects achieved tracking error, stability margins, and crossover frequencies similar to or better than those achieved with visual feedback alone.
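The article does not say how the stability margins and crossover frequencies were identified. A standard way to relate the two is McRuer's crossover model, in which the combined pilot-vehicle open loop near crossover behaves like an integrator with an effective time delay, Y_p*Y_c ≈ ω_c·e^(−jωτ_e)/(jω). Under that model the loop gain is 1 at ω = ω_c and the phase margin is 90° minus ω_c·τ_e (converted to degrees). The minimal Python sketch below uses made-up values of ω_c and τ_e for illustration; it is not data from the study, and the researchers may have used a different analysis.

```python
import cmath
import math


def crossover_open_loop(omega, omega_c, tau_e):
    """McRuer crossover model of the pilot-vehicle open loop:
    Y_p*Y_c(j*omega) ~= omega_c * exp(-j*omega*tau_e) / (j*omega)."""
    return omega_c * cmath.exp(-1j * omega * tau_e) / (1j * omega)


def phase_margin_deg(omega_c, tau_e):
    """Phase margin at gain crossover (omega = omega_c, where |Y_p*Y_c| = 1).

    The integrator contributes -90 deg and the delay -omega_c*tau_e rad,
    so the margin above -180 deg is 90 deg - omega_c*tau_e (in degrees).
    """
    return 90.0 - math.degrees(omega_c * tau_e)


if __name__ == "__main__":
    omega_c, tau_e = 3.0, 0.3  # illustrative values: rad/s and s
    print(abs(crossover_open_loop(omega_c, omega_c, tau_e)))  # approximately 1 at crossover
    print(phase_margin_deg(omega_c, tau_e))  # about 38.4 deg
    # A longer effective delay at the same crossover frequency erodes the margin
    print(phase_margin_deg(omega_c, 0.4))    # about 21.2 deg
```

The model makes the trade-off explicit: any cue that adds effective delay to the pilot's response reduces the phase margin unless the pilot lowers the crossover frequency.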

The test pilot reported that the haptic feedback felt natural and helped restore a sense of the tracking error and its rate of change, especially when vision was degraded. However, the pilot also noted an increased sensitivity to auditory noise and higher mental workload when using haptic feedback alone or combined with visual feedback compared to visual feedback alone. This was due in part to difficulty determining which sensory cues to prioritize when they were incongruent.

The researchers concluded that full-body haptic feedback shows promise for augmenting pilot perception and control, especially in situations where visual feedback is limited. However, more work is needed to refine the cueing algorithms, explore tasks with different levels of difficulty and dynamics, investigate audio cueing and interactions between multiple types of cues, and further quantify the effects on pilot workload. Overall, haptic technologies like the TESLASUIT could help enhance pilot situational awareness, reduce mental workload, and improve safety in the next generation of aircraft.

Photos provided by University of Maryland’s Extended Reality Flight Simulation and Control Lab
